Transfer learning is revolutionizing artificial intelligence by enabling machines to leverage existing knowledge, dramatically reducing the data and time required to train new models effectively.

🚀 The Foundation: Understanding Transfer Learning in Modern AI

In the rapidly evolving landscape of artificial intelligence, one of the most significant challenges organizations face is the requirement for massive datasets to train effective machine learning models. Traditional approaches demand thousands or even millions of labeled examples, making AI development expensive, time-consuming, and often prohibitive for smaller organizations or specialized applications.

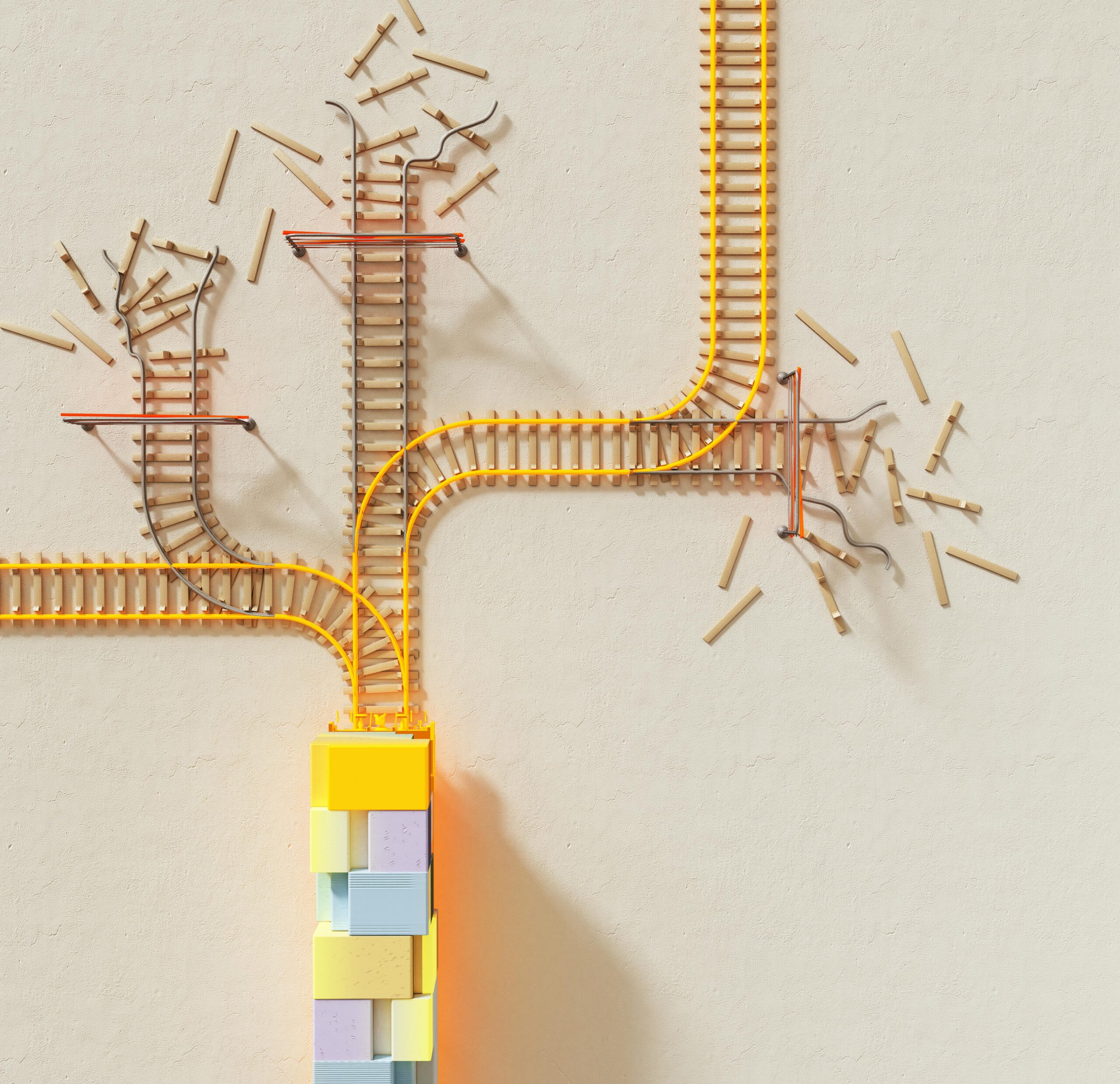

Transfer learning emerges as a game-changing paradigm that fundamentally alters this equation. Instead of training neural networks from scratch, transfer learning allows developers to take pre-trained models—already educated on vast datasets—and adapt them to new, specific tasks with minimal additional data. This approach mirrors human learning: we don’t relearn basic concepts every time we encounter a new situation; instead, we apply existing knowledge to novel contexts.

The concept has roots in cognitive science and educational psychology, where knowledge transfer has long been recognized as a fundamental aspect of human intelligence. When applied to machine learning, this principle enables AI systems to achieve remarkable performance even when facing data scarcity, a condition that would traditionally cripple conventional training approaches.

💡 Why Traditional Machine Learning Falls Short

Before diving deeper into transfer learning, it’s essential to understand why traditional machine learning approaches struggle with limited data scenarios. Classical supervised learning operates on a simple premise: the more examples a model sees during training, the better it becomes at recognizing patterns and making accurate predictions.

However, this data-hungry nature creates several critical bottlenecks. First, collecting and labeling large datasets requires substantial human effort and financial investment. Medical imaging, for instance, requires expert radiologists to annotate thousands of scans—a process that can take months or years. Second, many specialized domains simply don’t generate enough data to satisfy traditional training requirements. Rare diseases, emerging technologies, or niche industrial applications often have inherently limited datasets.

Third, privacy concerns and regulatory frameworks increasingly restrict access to large-scale data, particularly in sensitive sectors like healthcare and finance. Finally, training deep neural networks from scratch demands enormous computational resources, translating to significant energy consumption and carbon footprint—concerns that are becoming increasingly important in sustainable AI development.

🎯 How Transfer Learning Solves the Data Dilemma

Transfer learning addresses these challenges through an elegant solution: knowledge reuse. The approach typically involves two distinct phases. During the pre-training phase, a model is trained on a large, general dataset, often containing millions of examples. This foundational training helps the network learn general-purpose features and representations applicable across various tasks.

The second phase, fine-tuning, adapts this pre-trained model to a specific target task using a much smaller dataset. Instead of learning everything from scratch, the model only needs to adjust its higher-level representations to accommodate the nuances of the new task. The lower layers, which typically capture fundamental features like edges, textures, or basic patterns, remain largely unchanged.

This strategy proves remarkably effective because many AI tasks share underlying structural similarities. In computer vision, for example, whether you’re identifying cats, cars, or cancerous cells, the basic visual features—edges, corners, textures—remain relevant. A model pre-trained on ImageNet (containing over 14 million images across thousands of categories) has already learned these fundamental visual concepts and can rapidly adapt to specialized image recognition tasks.

🔧 Practical Implementations: From Theory to Application

Transfer learning manifests in several distinct approaches, each suited to different scenarios and constraints. The most straightforward method is feature extraction, where the pre-trained model serves as a fixed feature extractor. The convolutional base remains frozen, and only a new classifier is trained on top of the extracted features. This approach works exceptionally well when the target dataset is small and similar to the original training data.
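As a rough illustration of feature extraction, the sketch below keeps a stand-in "pre-trained" layer fixed and trains only a new logistic-regression head on four target examples. The frozen weights, the toy dataset, and every function name are made up for this example; in practice the frozen part would be the convolutional base of a real pre-trained network loaded through a deep learning framework.

```python
import math

# Stand-in for a pre-trained, frozen feature extractor (hypothetical weights).
FROZEN_WEIGHTS = [[0.9, -0.4], [-0.3, 0.8], [0.5, 0.5]]

def extract_features(x):
    """Apply the frozen layer: a linear map followed by ReLU. Never updated."""
    return [max(0.0, sum(w * xi for w, xi in zip(row, x))) for row in FROZEN_WEIGHTS]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_head(samples, labels, epochs=200, lr=0.5):
    """Train only a new logistic-regression head on top of the frozen features."""
    head_w = [0.0] * len(FROZEN_WEIGHTS)
    head_b = 0.0
    # Features are computed once because the base never changes.
    feats = [extract_features(x) for x in samples]
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            p = sigmoid(sum(w * fi for w, fi in zip(head_w, f)) + head_b)
            grad = p - y  # gradient of the log loss with respect to the logit
            head_w = [w - lr * grad * fi for w, fi in zip(head_w, f)]
            head_b -= lr * grad
    return head_w, head_b

def predict(x, head_w, head_b):
    f = extract_features(x)
    logit = sum(w * fi for w, fi in zip(head_w, f)) + head_b
    return 1 if sigmoid(logit) >= 0.5 else 0

# Tiny target dataset: class 1 when the first input dominates the second.
samples = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
labels = [1, 1, 0, 0]

frozen_before = [row[:] for row in FROZEN_WEIGHTS]
head_w, head_b = train_head(samples, labels)
predictions = [predict(x, head_w, head_b) for x in samples]
print(predictions)
```

Because the base is fixed, its outputs can be cached once and reused in every epoch, which is part of why feature extraction is cheap compared with fine-tuning.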

Fine-tuning represents a more flexible approach, where some or all layers of the pre-trained network are unfrozen and continue training on the new dataset, typically with a very low learning rate. This method allows the model to adapt more deeply to the target domain, while the low learning rate limits catastrophic forgetting, the tendency to lose previously learned information.

Domain adaptation takes transfer learning further by explicitly addressing the distributional differences between source and target domains. Techniques like adversarial training help align feature representations across domains, enabling models to generalize effectively even when source and target data have significant differences in style, context, or characteristics.

📊 Real-World Success Stories Across Industries

The practical impact of transfer learning extends across virtually every sector where AI finds application. In healthcare, transfer learning has enabled the development of diagnostic tools with unprecedented speed. Researchers have successfully adapted models pre-trained on natural images to detect diabetic retinopathy, identify skin cancer, and analyze chest X-rays for COVID-19—all with datasets orders of magnitude smaller than would traditionally be required.

The manufacturing sector leverages transfer learning for quality control and defect detection. Pre-trained vision models can be quickly adapted to identify defects in specialized products, even when defect examples are scarce. This capability can shorten the time needed to bring an inspection model into operation on a production line from months to days or weeks.

Natural language processing has perhaps seen the most transformative impact. Models like BERT, GPT, and their successors are pre-trained on massive text corpora and then fine-tuned for specific tasks—sentiment analysis, question answering, translation, or text generation—with minimal task-specific data. This approach has democratized NLP, making sophisticated language understanding accessible to organizations without massive computational budgets.

In agriculture, transfer learning enables farmers to deploy crop disease detection systems customized to their specific crops and regional conditions without requiring thousands of labeled images. Environmental conservation projects use adapted models to identify endangered species from camera trap images, even when examples are limited.

🛠️ Choosing the Right Pre-Trained Model

Success with transfer learning begins with selecting an appropriate foundation model. Several factors should guide this decision. First, consider the similarity between the pre-training task and your target application. Models trained on similar data distributions generally transfer more effectively. For medical imaging, models pre-trained on medical datasets often outperform those trained solely on natural images, although the size of the gain depends on the task and on how much target data is available.

Model architecture matters significantly. Larger, more complex architectures like ResNet, EfficientNet, or Vision Transformers capture richer representations but require more computational resources during fine-tuning. Smaller architectures like MobileNet offer efficiency advantages for deployment on resource-constrained devices while still providing substantial transfer learning benefits.

The availability of pre-trained weights is another practical consideration. Frameworks like TensorFlow and PyTorch provide extensive model zoos with pre-trained weights for popular architectures. Community repositories like Hugging Face have democratized access to state-of-the-art pre-trained models across multiple domains, significantly lowering the barrier to entry for transfer learning adoption.

⚡ Optimizing the Fine-Tuning Process

Effective fine-tuning requires careful attention to hyperparameters and training strategies. Learning rate selection is particularly critical—too high, and you risk destroying the valuable pre-trained features; too low, and training becomes unnecessarily slow or gets stuck in suboptimal solutions. A common strategy employs discriminative fine-tuning, where different layers use different learning rates, with earlier layers (closer to input) having lower rates than later layers.
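One simple way to picture discriminative fine-tuning is a geometric schedule: the top layer gets a base rate and each earlier layer gets a fixed fraction of the rate above it. The sketch below is a minimal illustration under that assumption; the layer names, the base rate of 1e-3, and the decay factor of 0.5 are placeholders, not recommended values.

```python
def discriminative_learning_rates(layer_names, top_lr=1e-3, decay=0.5):
    """Assign each layer a learning rate that shrinks toward the input.

    layer_names is ordered from input to output. The last layer gets top_lr,
    and each earlier layer gets the next layer's rate multiplied by decay.
    """
    n = len(layer_names)
    return {name: top_lr * decay ** (n - 1 - i) for i, name in enumerate(layer_names)}

layers = ["conv1", "conv2", "conv3", "classifier"]  # hypothetical layer names
rates = discriminative_learning_rates(layers)
for name in layers:
    print(f"{name}: {rates[name]:.6f}")
```

In a framework such as PyTorch, a mapping like this would typically be turned into optimizer parameter groups, one group per layer with its own `lr`.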

Data augmentation becomes even more important when working with limited datasets. Techniques like rotation, scaling, color jittering, and mixup help the model generalize better by artificially expanding the effective training set diversity. However, augmentation strategies should reflect realistic variations in the target domain—augmentations that create unrealistic samples can harm rather than help performance.
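Of the augmentations mentioned, mixup is the easiest to show in a few lines: two examples and their one-hot labels are blended with a weight drawn from a Beta distribution. The following is a minimal sketch on plain Python lists; the `alpha` value of 0.2 and the toy vectors are assumptions chosen only for illustration.

```python
import random

def mixup(x1, y1, x2, y2, alpha=0.2, rng=random):
    """Blend two examples and their one-hot labels with a Beta-distributed weight."""
    lam = rng.betavariate(alpha, alpha)
    x = [lam * a + (1 - lam) * b for a, b in zip(x1, x2)]
    y = [lam * a + (1 - lam) * b for a, b in zip(y1, y2)]
    return x, y, lam

rng = random.Random(0)  # seeded so the example is reproducible
x, y, lam = mixup(
    [1.0, 0.0, 0.5], [1.0, 0.0],  # example A and its one-hot label
    [0.0, 1.0, 0.5], [0.0, 1.0],  # example B and its one-hot label
    rng=rng,
)
print(lam, x, y)
```

The mixed label stays a valid probability distribution, so the usual cross-entropy loss can be applied to it unchanged.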

Regularization techniques help prevent overfitting, a constant risk when fine-tuning on small datasets. Dropout, weight decay, and early stopping all play important roles in maintaining generalization performance. Progressive unfreezing is another effective strategy: layers are unfrozen gradually during training, starting with the top (output-side) layers and moving down toward the input, so the model adapts incrementally while preserving valuable pre-trained features.
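Progressive unfreezing can be expressed as a small schedule that says which layers are trainable at each epoch. The sketch below assumes one additional layer is unfrozen every two epochs, starting from the output side; the layer names and the stage length are hypothetical.

```python
def trainable_layers(layer_names, epoch, epochs_per_stage=2):
    """Return the layers that are trainable at a given epoch.

    layer_names is ordered from input to output. Training starts with only the
    last (top) layer unfrozen, and one more layer is unfrozen every
    epochs_per_stage epochs, moving toward the input.
    """
    n_unfrozen = min(len(layer_names), 1 + epoch // epochs_per_stage)
    return layer_names[len(layer_names) - n_unfrozen:]

layers = ["block1", "block2", "block3", "head"]  # hypothetical layer names
schedule = {epoch: trainable_layers(layers, epoch) for epoch in range(8)}
for epoch, names in schedule.items():
    print(epoch, names)
```

A training loop would consult this schedule at the start of each epoch and enable gradient updates only for the listed layers.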

🌐 Transfer Learning Beyond Computer Vision

While computer vision pioneered much of transfer learning’s practical application, the paradigm has expanded dramatically into other domains. In audio processing, models pre-trained on large speech or music datasets transfer effectively to specialized tasks like rare language speech recognition, medical sound analysis, or wildlife acoustic monitoring.

Reinforcement learning has embraced transfer learning to address its notoriously sample-inefficient nature. Agents trained in simulation environments can transfer learned policies to real-world robotics tasks, dramatically reducing the physical training time and associated costs. Similarly, policies learned in one game or environment can bootstrap learning in related but distinct scenarios.

Time series analysis, crucial for applications ranging from financial forecasting to predictive maintenance, benefits from transfer learning when historical data is limited. Models trained on related time series can provide valuable inductive biases that accelerate learning on new, data-scarce sequences.

Graph neural networks, used for analyzing molecular structures, social networks, and knowledge graphs, increasingly leverage transfer learning. Pre-training on large graph databases helps models learn fundamental structural patterns that generalize across diverse graph-structured data.

🔍 Addressing Challenges and Limitations

Despite its tremendous benefits, transfer learning is not a panacea. Negative transfer occurs when pre-trained knowledge actually harms performance on the target task, typically when source and target domains are too dissimilar or when the pre-trained model has learned biases incompatible with the new task. Careful evaluation against a trained-from-scratch baseline, and sometimes trying multiple source models, help mitigate this risk.

Computational requirements, while reduced compared to training from scratch, can still be substantial. Fine-tuning large models like Vision Transformers or GPT-class language models requires significant GPU resources. However, techniques like adapter modules, which add small trainable components to frozen pre-trained models, offer promising solutions for resource-constrained scenarios.
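To get a feel for why adapters are cheap, it helps to count parameters. The arithmetic below assumes a bottleneck-style adapter (a down-projection, a nonlinearity, and an up-projection, each projection with a bias) and compares it with a single dense layer of the same width. The hidden size of 768 and bottleneck size of 64 are illustrative choices, not values taken from any specific model.

```python
def adapter_param_count(hidden_dim, bottleneck_dim):
    """Parameters in a bottleneck adapter: down-projection plus up-projection."""
    down = hidden_dim * bottleneck_dim + bottleneck_dim  # weights + bias
    up = bottleneck_dim * hidden_dim + hidden_dim        # weights + bias
    return down + up

def dense_param_count(in_dim, out_dim):
    """Parameters in one fully connected layer, weights plus bias."""
    return in_dim * out_dim + out_dim

hidden, bottleneck = 768, 64  # illustrative sizes (assumption)
adapter = adapter_param_count(hidden, bottleneck)
dense = dense_param_count(hidden, hidden)
print(f"adapter: {adapter}, dense layer: {dense}, ratio: {adapter / dense:.3f}")
```

Under these assumptions the adapter trains roughly a sixth of the parameters of one dense layer of the same width, and the frozen backbone contributes no trainable parameters at all.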

Bias inheritance represents another concern. Pre-trained models carry biases present in their training data, which can propagate to downstream applications. When adapting models for sensitive applications—hiring decisions, loan approvals, medical diagnoses—careful bias auditing and mitigation strategies become essential ethical requirements.

The black-box nature of deep learning compounds when using transfer learning. Understanding why a fine-tuned model makes particular decisions becomes more complex when the foundational knowledge comes from pre-training on datasets you may not fully understand. Explainability techniques and thorough testing across diverse scenarios help address these transparency challenges.

🎓 Best Practices for Implementation Success

Organizations embarking on transfer learning initiatives should follow several best practices to maximize success probability. Start with a clear understanding of your target task and dataset characteristics. Document the domain, data volume, label quality, and specific performance requirements. This foundation informs all subsequent decisions about model selection and training strategy.

Establish robust evaluation protocols early. Hold-out test sets should reflect real-world deployment conditions, including edge cases and challenging scenarios. Cross-validation helps ensure results aren’t artifacts of particular data splits. Track multiple metrics beyond simple accuracy—precision, recall, F1-score, and domain-specific measures provide more complete performance pictures.
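A small worked example shows why accuracy alone can mislead on imbalanced data. The sketch below computes precision, recall, and F1 from scratch for a made-up set of ten labels in which the positive class is rare; the data is invented purely for illustration.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Compute precision, recall, and F1 for one class from paired label lists."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

# Invented labels: two positives out of ten, one of which the model misses.
y_true = [1, 0, 0, 0, 0, 0, 0, 0, 1, 0]
y_pred = [0, 0, 0, 0, 0, 0, 0, 0, 1, 0]

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)
print(f"accuracy={accuracy:.2f} precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Here accuracy is 0.90 even though the model finds only half of the positive cases, which recall (0.50) makes visible.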

Invest in data quality over quantity. When working with limited data, each example’s quality becomes crucial. Careful labeling, expert review, and cleaning processes pay substantial dividends in final model performance. Consider active learning strategies where the model identifies the most informative examples for human labeling, maximizing learning efficiency.
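One common form of active learning is uncertainty sampling, where the examples the model is least sure about are sent to annotators first. The sketch below ranks an unlabeled pool by the entropy of the predicted class probabilities. The example IDs and probabilities are invented, and entropy is only one of several possible uncertainty measures.

```python
import math

def entropy(probs):
    """Shannon entropy of a predicted class distribution (natural log)."""
    return -sum(p * math.log(p) for p in probs if p > 0)

def select_for_labeling(predictions, budget):
    """Pick the unlabeled examples whose predicted distribution is most uncertain."""
    ranked = sorted(predictions, key=lambda item: entropy(item[1]), reverse=True)
    return [example_id for example_id, _ in ranked[:budget]]

# Hypothetical model outputs on an unlabeled pool: (example id, class probabilities).
pool = [
    ("img_001", [0.98, 0.02]),
    ("img_002", [0.55, 0.45]),
    ("img_003", [0.70, 0.30]),
    ("img_004", [0.50, 0.50]),
]
chosen = select_for_labeling(pool, budget=2)
print(chosen)
```

With a labeling budget of two, the near-coin-flip predictions are selected and the confident one is left for later.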

Maintain version control not just for code but for models, datasets, and hyperparameters. Experiment tracking tools help organize the inevitable proliferation of training runs and ensure reproducibility. This discipline becomes especially important when transitioning models from research to production environments.

🚀 The Future Landscape: What’s Coming Next

Transfer learning continues evolving rapidly, with several exciting directions emerging. Foundation models—extremely large models pre-trained on diverse, massive datasets—promise increasingly effective transfer to downstream tasks with minimal fine-tuning. These models aim to capture such comprehensive general knowledge that they can adapt to virtually any specific task with just a handful of examples or even zero-shot (no examples at all).

Multi-modal transfer learning, where models are pre-trained on multiple data types (images, text, audio) simultaneously, enables richer representations and more versatile applications. Models like CLIP, which learns joint image-text representations, demonstrate how multi-modal pre-training enables entirely new capabilities like zero-shot image classification from text descriptions.
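The idea behind zero-shot classification with joint image-text embeddings can be sketched without any model at all: embed the image, embed one text prompt per candidate label, and pick the label whose embedding is closest. The three-dimensional vectors below are made up for illustration; a real system would obtain them from the image and text encoders of a model such as CLIP.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def zero_shot_classify(image_embedding, text_embeddings):
    """Return the label whose text embedding best matches the image embedding."""
    scores = {
        label: cosine_similarity(image_embedding, emb)
        for label, emb in text_embeddings.items()
    }
    return max(scores, key=scores.get), scores

# Made-up embeddings standing in for encoder outputs (assumption).
text_embeddings = {
    "a photo of a cat": [0.9, 0.1, 0.0],
    "a photo of a dog": [0.1, 0.9, 0.0],
    "a photo of a car": [0.0, 0.1, 0.9],
}
image_embedding = [0.8, 0.3, 0.1]

label, scores = zero_shot_classify(image_embedding, text_embeddings)
print(label)
```

No labeled images of the target classes are needed; adding a class only requires writing a new text prompt and embedding it.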

Federated transfer learning addresses privacy concerns by enabling collaborative model training across distributed datasets without centralizing sensitive data. This approach holds particular promise for healthcare and financial applications where data sharing faces regulatory constraints.
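The article does not name a specific aggregation rule, but a widely used one in federated settings is federated averaging: each site trains locally and shares only its model weights, which a coordinator averages in proportion to local dataset size. The sketch below shows just that aggregation step on toy weight vectors; the client weights and dataset sizes are invented.

```python
def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighting each by its local dataset size."""
    total = sum(client_sizes)
    n_params = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical sites; only weights and example counts leave each site.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [100, 300]

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

In a transfer learning setup, the averaged weights would usually be those of the fine-tuned layers, while the shared pre-trained backbone is distributed to every site beforehand.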

Automated machine learning (AutoML) increasingly incorporates transfer learning, automatically selecting appropriate pre-trained models and optimizing fine-tuning strategies. This democratization makes sophisticated AI accessible to practitioners without deep machine learning expertise.

💼 Strategic Considerations for Organizations

For businesses considering transfer learning adoption, several strategic factors warrant consideration. The technology dramatically reduces the data barrier to AI deployment, but success still requires domain expertise to frame problems appropriately, evaluate results critically, and integrate models into operational workflows.

Build or partner for capability development. While transfer learning reduces technical barriers, teams still need machine learning engineers familiar with modern frameworks, best practices, and troubleshooting. Partnerships with specialized AI vendors or consultants can accelerate initial deployments while internal capabilities develop.

Start with high-value, well-scoped pilot projects. Transfer learning excels when applied to specific, well-defined problems where even modest performance improvements deliver measurable business value. Success with focused pilots builds organizational confidence and expertise for more ambitious initiatives.

Consider ethical implications proactively. Transfer learning inherits biases from pre-training data and can amplify them in sensitive applications. Establish governance frameworks, bias testing protocols, and ethical review processes before deployment, especially for applications affecting individuals or communities.

🎯 Unlocking Your AI Potential with Transfer Learning

Transfer learning represents more than just a technical advancement—it’s a fundamental shift in how we approach artificial intelligence development. By enabling effective learning from limited data, it democratizes AI, making sophisticated capabilities accessible to organizations of all sizes and across all domains.

The path from concept to deployed AI solution has shortened dramatically. What once required teams of specialists, months of data collection, and substantial computational budgets can now often be accomplished with smaller teams, minimal data, and modest resources—without sacrificing performance quality.

As pre-trained models become more capable and diverse, as fine-tuning techniques become more efficient, and as tools become more accessible, transfer learning’s impact will only grow. Organizations that master this approach position themselves to respond rapidly to new challenges, experiment with innovative applications, and maintain competitive advantages in increasingly AI-driven markets.

The power of transfer learning lies not just in what it enables today, but in the future it makes possible. By dramatically lowering barriers to AI adoption, it accelerates the pace of innovation, broadens participation in AI development, and brings intelligent systems to domains and applications previously beyond reach. For anyone serious about leveraging artificial intelligence effectively, understanding and implementing transfer learning has become not optional, but essential.

Toni Santos is a vibration researcher and diagnostic engineer specializing in the study of mechanical oscillation systems, structural resonance behavior, and the analytical frameworks embedded in modern fault detection. Through an interdisciplinary and sensor-focused lens, Toni investigates how engineers have encoded knowledge, precision, and diagnostics into the vibrational world, across industries, machines, and predictive systems.

His work is grounded in a fascination with vibrations not only as phenomena, but as carriers of hidden meaning. From amplitude mapping techniques to frequency stress analysis and material resonance testing, Toni uncovers the visual and analytical tools through which engineers preserved their relationship with the mechanical unknown.

With a background in design semiotics and vibration analysis history, Toni blends visual analysis with archival research to reveal how vibrations were used to shape identity, transmit memory, and encode diagnostic knowledge. As the creative mind behind halvoryx, Toni curates illustrated taxonomies, speculative vibration studies, and symbolic interpretations that revive the deep technical ties between oscillations, fault patterns, and forgotten science.

His work is a tribute to:

- The lost diagnostic wisdom of Amplitude Mapping Practices
- The precise methods of Frequency Stress Analysis and Testing
- The structural presence of Material Resonance and Behavior
- The layered analytical language of Vibration Fault Prediction and Patterns

Whether you're a vibration historian, diagnostic researcher, or curious gatherer of forgotten engineering wisdom, Toni invites you to explore the hidden roots of oscillation knowledge, one signal, one frequency, one pattern at a time.